Chapter 3 - Verifiable Off-chain Compute: Enabling an Instagram-like experience for Web3

Chapter 3: The Challenger to the Throne (TEE Co-Processor) and the Trust Broker (Crypto-Economic Co-Processor)

Florian Unger (Principal, Florin Digital) | James Burkett (Partner, Florin Digital) | Feyo Sickinghe (Partner, Florin Digital)

A big thank you to Ismael (Lagrange Labs), Esli and Roshan (Marlin), Hang and Marvin (Phala Network), Deli (Automata Network), Guy and Mak (Fhenix) and their teams for invaluable help, review, and feedback.


Complete Table of Contents

Chapter 1: What are Co-Processors and why do we need them?

Chapter 2: Understanding the different types of Co-Processors (ZK and Optimistic Co-Processors)

Chapter 3: Understanding the different types of Co-Processors (TEE and Crypto-Economic Co-Processors)

TEE Co-Processor (the Challenger to the Throne)

Case Studies (Marlin, Phala, Automata)

Crypto-Economic Co-Processor (the Trust Broker)

Case Study (Fhenix)

Comparing different verification methodologies

Combining different verification methodologies

Chapter 4: Use cases of Co-Processors

Chapter 5: Open questions on the future of Co-Processors

Executive Summary

Trusted Execution Environments (“TEEs” or “Enclaves”) and Crypto-Economic Co-Processors enable cheap and verifiable off-chain compute solutions for applications.

TEEs enable tamper-proof, confidential compute operations that remove the need for users to trust the computation component of the application.

Due to the low computational cost overhead, TEEs are especially “good” for continuous compute operations, enabling trustless AI agents, trading bots, chatbots and the like.

Restaking platforms like Eigenlayer enable protocols to outsource operations secured by economic trust, providing fast off-chain verification and faster time-to-market.

In practice, we believe different verification methods will likely be combined. This approach leverages the strengths of each method, optimizing for security, performance, and cost-efficiency.

Due to the size and topical relevance of this chapter covering TEEs, we will provide a short recap of what the reader can expect. To begin, we will provide a brief explanation of TEEs, their properties (integrity, confidentiality and attestation), and how they enable confidential, tamper-proof compute. Next, we compare Zero Knowledge (“ZK”) and TEEs and expand on the fixed and variable cost structures of TEE attestation and ZK proving. Following a discussion on the advantages and disadvantages of TEEs and TEE Co-Processors, we conclude the TEE part of this chapter with three case studies on relevant protocols in the space: Marlin, Phala, and Automata.

TEE Co-Processor (The Challenger to the Throne)

What are TEEs?

A TEE is a secure computing section of a device or network that is designed to ensure sensitive data is stored, processed, and protected in isolation. Programs running inside a TEE benefit from confidentiality and integrity throughout execution, even if the surrounding system is compromised. TEEs enable secure computation, data privacy, and even confidential smart contracts (meaning a smart contract whose code cannot be accessed). In short, TEEs enable tamper-proof computation.

Most TEE enclaves are hardware-based, running locally or in the cloud (via providers such as Microsoft Azure); less commonly, they are software-based. Hardware-based TEEs are implemented directly within the hardware device (Intel SGX, ARM TrustZone, Nvidia Confidential Computing, etc.) and rely on the physical hardware for security. Software-based TEEs are implemented through software solutions without relying on specialized hardware. These TEEs use software techniques to create secure environments within the existing hardware.

To ensure computational integrity and correctness, applications can outsource computation to TEEs, eliminating the need for users to trust the application's computational processes. Instead, the computations are handled by a TEE, which guarantees their security and accuracy.

While users must still trust the application developer with regards to the integrity of the program, they can be confident that the computational process itself is correct. In a perfectly trustless environment, the integrity of the application developer would also be guaranteed. Projects like Flashbots’ Suave and Circlesmoney’s Quartz (with their TEE Sidecar module) are working towards incorporating a blockchain layer to achieve absolute trustlessness with TEEs. We won’t cover details in this piece but additional materials from Andrew Miller at Flashbots can be found here.

Before we explore the properties of TEEs, we provide a useful analogy in case you need to explain TEEs to your non-crypto friends. You can think of a TEE as being a Coca-Cola factory. Authorized ingredients are added and processed confidentially using the secret Coca-Cola recipe inside a ring-fenced production facility. Throughout this process, the recipe and manufacturing steps remain completely hidden, ensuring the final product is securely produced without revealing any secrets. Similarly, a TEE (the factory) ensures the recipe and process (confidential information) remain hidden and protected from external interference.

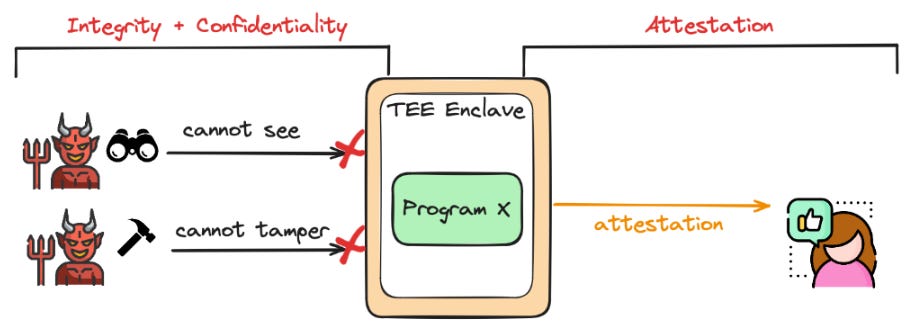

TEEs are unique compared to traditional computing environments due to their integrity and confidentiality properties. Integrity ensures that the program being computed stays unchanged, removing the risk of tampering by either the host or the creator. The second key property is confidentiality, which allows the program in the TEE to operate with a secret state that is inaccessible to other processes. TEEs (or enclaves) guarantee that neither the host nor any other application can spy on the data or alter any computations inside the TEE.

Many TEEs also have attestation properties that offer additional trust guarantees. An attestation is a proof that enables the TEE to cryptographically demonstrate to a remote party that a particular program, whether currently running or already run, has not been tampered with. Attestation thereby establishes trust in the TEE by demonstrating specific, tamper-free operations to third parties. We can think of attestations as proof of computational correctness that any independent party can verify. In our Coca-Cola analogy, attestation could be thought of as a secure log book maintained by the factory, which records every step of the production process and can be independently verified to ensure the process was followed correctly.
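To make the attestation flow concrete, here is a minimal Python sketch of remote attestation under simplifying assumptions: the enclave's “measurement” is a SHA-256 hash of the loaded program, the verifier supplies a fresh nonce, and an HMAC keyed with a hypothetical `VENDOR_KEY` stands in for the hardware vendor's signature. Real TEEs (e.g. Intel SGX) use asymmetric keys and vendor certificate chains instead, so treat every name below as illustrative.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass

# Hypothetical stand-in for the hardware vendor's attestation key. Real TEEs sign
# reports with asymmetric keys chained to a vendor certificate; HMAC keeps this
# sketch dependency-free but means the verifier must share the key.
VENDOR_KEY = secrets.token_bytes(32)


@dataclass(frozen=True)
class AttestationReport:
    measurement: bytes  # hash of the code loaded into the enclave
    nonce: bytes        # verifier-chosen challenge, prevents replaying old reports
    signature: bytes    # produced by the (simulated) hardware


def measure(program: bytes) -> bytes:
    return hashlib.sha256(program).digest()


def enclave_attest(program: bytes, nonce: bytes) -> AttestationReport:
    """Simulated hardware: measures the loaded program and signs the report."""
    m = measure(program)
    sig = hmac.new(VENDOR_KEY, m + nonce, hashlib.sha256).digest()
    return AttestationReport(m, nonce, sig)


def verify_attestation(report: AttestationReport, expected_program: bytes, nonce: bytes) -> bool:
    """Remote verifier: accept only an authentic, fresh report for the expected code."""
    expected_sig = hmac.new(VENDOR_KEY, report.measurement + report.nonce, hashlib.sha256).digest()
    return (
        hmac.compare_digest(report.signature, expected_sig)
        and report.nonce == nonce
        and report.measurement == measure(expected_program)
    )


nonce = secrets.token_bytes(16)
program = b"def screen(addr): ..."
report = enclave_attest(program, nonce)
print(verify_attestation(report, program, nonce))                    # True
print(verify_attestation(report, b"def screen(addr): pass", nonce))  # False: code changed
```

The verifier never sees the enclave's internal state; it only checks that an authentic, fresh report names the code it expects.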

“Thanks for telling me about TEEs, their properties and providing me with a useful analogy. But what is the real impact on current Crypto products?” we hear you say. Let’s briefly touch on some high-level examples to put the above into context.

Example 1: If we put a smart contract inside a TEE, privacy becomes inherent as everything inside the TEE is confidential by default.

Example 2: Putting a multi-party computation (MPC) inside a TEE enclave can ensure collusion resistance because the TEE provides a secure and tamper-proof environment. This means that even if two or three parties in the MPC collude, they cannot access or alter the computations inside the TEE.

Example 3: Putting a Web2 front-end inside a TEE creates a trustless front-end since the TEE provides a secure and isolated environment. In this setup, the front-end code cannot be tampered with or altered by external parties, including the host server.

“So how does a TEE actually work?” Thank you for asking, Bill Lumbergh from Office Space. Let’s look into this!

Create an Enclave: A secure, isolated execution environment is established in a dedicated section of a processor.

Invoke Trusted Function: When a program needs to perform a secure operation, it uses the SGX call primitive (an ECALL, Intel’s secure call mechanism). This secure call ensures that the program's request to enter the enclave is authenticated and protected. Non-Intel TEEs use similar mechanisms to ensure the secure invocation of trusted functions.

Enter the Enclave: The program passes through a secure entry point, known as the call gate, to enter the enclave. This process is reversible, allowing the program to exit the enclave after completing the secure operations. Typically, an enclave handles a single program or process at a time, but multiple threads or processes can run within the same enclave if they adhere to its security policies.

Execute Inside Enclave: The program's execution continues within the enclave.

Run Trusted Function: The trusted function is carried out by one of the enclave’s threads.

Return Results: After the function is completed, its result is encrypted and sent back to the program.
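The six steps above can be modelled in a few lines. The sketch below is a hypothetical Python model, not the Intel SGX SDK: `ToyEnclave`, `ecall`, and the toy SHA-256 keystream cipher are assumptions made for illustration, and Python itself cannot enforce the memory isolation that real enclave hardware provides.

```python
import hashlib
import hmac
import secrets


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy SHA-256 counter-mode stream cipher. Illustration only, NOT secure.

    XOR-ing twice with the same key restores the input, so this both
    encrypts and decrypts.
    """
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


class ToyEnclave:
    """Hypothetical model of the enclave workflow; not the Intel SGX SDK API."""

    def __init__(self, session_key: bytes) -> None:
        # 1. Create an enclave: private state the host is not supposed to read.
        #    (Python cannot enforce this; real TEEs isolate it in hardware.)
        self._sealed_key = secrets.token_bytes(32)
        self._session_key = session_key
        # Only functions registered here are reachable through the entry point.
        self._entry_points = {"sign": self._sign}

    def _sign(self, message: bytes) -> bytes:
        # 5. Run the trusted function using enclave-only state.
        return hmac.new(self._sealed_key, message, hashlib.sha256).digest()

    def ecall(self, name: str, payload: bytes) -> bytes:
        # 2-3. Invoke a trusted function and enter through the "call gate".
        if name not in self._entry_points:
            raise PermissionError(f"{name!r} is not an enclave entry point")
        # 4. Execution continues inside the enclave.
        result = self._entry_points[name](payload)
        # 6. Encrypt the result before it leaves the enclave.
        return keystream_xor(self._session_key, result)


session_key = secrets.token_bytes(32)  # assumed to be agreed with the caller beforehand
enclave = ToyEnclave(session_key)
ciphertext = enclave.ecall("sign", b"transfer 10 ETH")
signature = keystream_xor(session_key, ciphertext)  # caller decrypts the result
print(len(signature))  # 32
```

The design point being illustrated is the narrow interface: the host can only reach registered entry points, and anything that leaves the enclave is encrypted for the caller.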

TEEs vs. ZKPs - the new Solana vs. ETH debate?

As is typical in crypto, the resurgence of TEEs has sparked widespread discussion and comparisons with Zero-Knowledge Proofs (“ZKPs”) in recent weeks (tweets from Anna Rose, RJ Catalan, Uma Roy, Esli Adiandhra). From a spectator's point of view, it has been exciting to see a renewed interest in technology paradigms. We won’t jump into this debate (ZK vs. TEE) in this chapter so feel free to check out the aforementioned tweets.

Regardless of whether you are using ZKPs or TEEs, there are trust assumptions either way. ZKPs require trust in the software and the underlying mathematics. Any flaws in the ZK-circuit or its implementation could unintentionally prove incorrect statements or expose sensitive information. On the other hand, TEEs rely on hardware-enforced isolation, requiring trust in the specific hardware setup. If the hardware is vulnerable to being compromised, the TEE cannot ensure the integrity and confidentiality of the data and processes within it.

The second thing we want to highlight is the cost of compute comparison between ZK and TEE. At a high level, while ZK computation is 1,000-10,000x more expensive than basic computation, TEE computation is only about 10-20% more expensive. Removing this significant cost barrier opens the door to broader use cases.

AI is the topic of the day as we know. It is possible to put LLM scripts into TEE enclaves, ensuring that the LLM is not tampered with once it is deployed. This in and of itself is hugely significant as we can now empower individuals/businesses to create custom LLMs. Once in a TEE, the LLM script cannot be changed and all the data that enters the LLM remains confidential when it is being processed. Due to the integrity and confidentiality properties of a TEE, it is possible to create a trustless LLM. This is a solution which is currently being experimented with by the team at Phala Network.

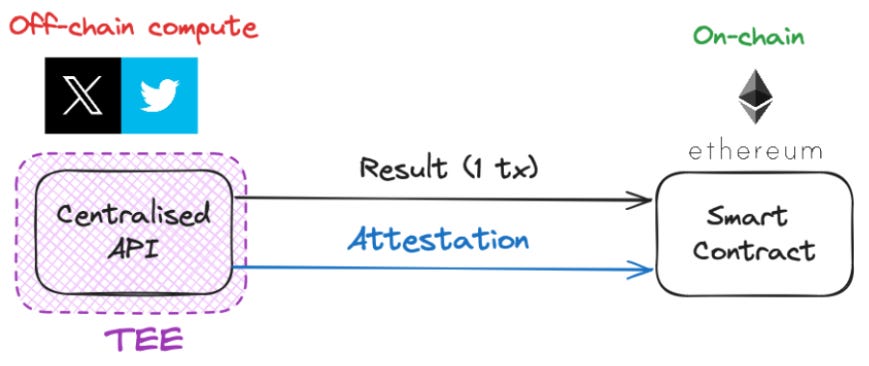

But rather than accepting this high-level statement at face value, we want to understand the details. Just like ZKPs produce a proof which is verified by a 3rd party, TEEs produce attestations which can be independently verified. This implies 1) a proving/attestation operation and 2) a verification operation.

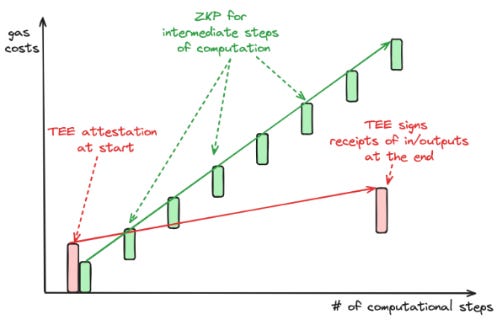

On the attestation side, TEEs require attestation only at the start and end of a computational process, verifying the integrity and correctness of the entire process with just two attestations. In contrast, ZK Co-Processors require proof for every computational step, with each incremental step needing individual verification to ensure overall correctness.

This difference becomes particularly significant with continuous operations. For example, proving that an address has never interacted with an OFAC-restricted entity like Tornado Cash using ZK Co-Processors would require creating an individual proof for each transaction, which as you can imagine becomes extremely expensive. In contrast, a TEE would provide an attestation at the start, confirming it will exclude addresses that interacted with Tornado Cash, and another attestation at the end, confirming the exclusion was maintained throughout the computation. This method is not only simpler but also more cost-effective as it avoids the need for multiple proofs, thus reducing the computational overhead.
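A rough sketch of the Tornado Cash example, assuming simple SHA-256 commitments in place of signed TEE attestations and placeholder strings in place of real addresses. It only illustrates the counting argument in the text: one enclave session emits a start and an end attestation, whereas the ZK approach described above needs one proof per transaction.

```python
import hashlib
from typing import Iterable


def commit(*parts: bytes) -> str:
    """SHA-256 commitment standing in for a signed TEE attestation (simplification)."""
    h = hashlib.sha256()
    for p in parts:
        h.update(len(p).to_bytes(4, "big") + p)  # length-prefix to avoid ambiguity
    return h.hexdigest()


def tee_screen(program_id: bytes, blocklist: set[str], txs: Iterable[tuple[str, str]]):
    """Screen (sender, counterparty) pairs inside a single enclave session.

    One attestation at the start binds the program and blocklist; one at the end
    binds the results. No per-transaction proof is produced.
    """
    start = commit(program_id, ",".join(sorted(blocklist)).encode())
    flagged = sorted({sender for sender, counterparty in txs if counterparty in blocklist})
    end = commit(start.encode(), ",".join(flagged).encode())
    return start, end, flagged


def artifacts_needed(num_txs: int) -> dict[str, int]:
    # Counting model from the text: one ZK proof per tx vs. two TEE attestations.
    return {"zk_proofs": num_txs, "tee_attestations": 2}


txs = [("alice", "uniswap"), ("bob", "tornado-cash"), ("carol", "aave")]
start, end, flagged = tee_screen(b"screening-v1", {"tornado-cash"}, txs)
print(flagged)                   # ['bob']
print(artifacts_needed(10_000))  # {'zk_proofs': 10000, 'tee_attestations': 2}
```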

Although proofs can be verified in under a second in both cases, the verification processes for TEEs and ZK systems differ significantly in computational intensity. Using Ethereum gas for an apples-to-apples comparison, ZK verification usually costs approximately 100k gas or more per verification. TEE attestation verification, by contrast, is a one-time cost per enclave, usually in the range of 1-10m gas, after which the marginal verification cost per transaction is significantly cheaper at 2k-5k gas. The tradeoff is that TEE verification has high fixed costs but low variable costs, while the inverse is true for ZK verification.

Verification for continuous operations is therefore cheaper with TEEs above a certain number of transactions, which makes TEE enclaves much more cost-effective for high-throughput applications that require low latency (e.g. LLM chatbots). For operations requiring continuous verification, the fixed cost of establishing a TEE may be preferable to the higher per-transaction verification cost of a ZKP. A chatbot (high throughput) may be better suited for a TEE, while a one-off computation like a “Proof of reserves computation” would likely see better performance from a custom ZK-circuit.
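The fixed-versus-variable tradeoff reduces to a break-even calculation. Using the figures quoted above (1-10m gas fixed plus 2k-5k gas per transaction for TEEs, roughly 100k gas per ZK verification), TEEs become cheaper after somewhere between 11 and 106 verifications. Because ZK verification is quoted as “100k gas or more”, these break-even points are upper bounds. The helper names are illustrative.

```python
def total_gas_tee(n: int, fixed: int, per_tx: int) -> int:
    # One-time enclave attestation verification plus a small per-tx check.
    return fixed + n * per_tx


def total_gas_zk(n: int, per_tx: int) -> int:
    # No setup cost, but every verification pays the full proof-verification cost.
    return n * per_tx


def breakeven_n(fixed_tee: int, per_tx_tee: int, per_tx_zk: int) -> int:
    """Smallest n at which the TEE route is strictly cheaper than the ZK route."""
    if per_tx_zk <= per_tx_tee:
        raise ValueError("TEE never breaks even unless its marginal cost is lower")
    return fixed_tee // (per_tx_zk - per_tx_tee) + 1


# Bounds from the text: TEE fixed 1m-10m gas, 2k-5k per tx; ZK ~100k per tx.
best = breakeven_n(1_000_000, 2_000, 100_000)    # cheapest TEE setup
worst = breakeven_n(10_000_000, 5_000, 100_000)  # most expensive TEE setup
print(best, worst)  # 11 106
```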

Now that we have covered the basics of TEEs, let’s dive into the advantages and disadvantages of TEEs and TEE Co-Processors.

Advantages:

Cost-Effective: TEEs are comparatively cheap, adding only a modest overhead over traditional compute (roughly 10-30% depending on the estimate), whereas ZK compute is 1,000x or more expensive.

Flexible: TEEs can be easily combined with other techniques like Fully Homomorphic Encryption (FHE), MPC, or ZKPs. While MPC and FHE have honest-majority assumptions, TEEs provide an alternative approach that can complement these methods, which when combined can offer more robust security at equally low cost. Mixing MPC with TEEs enables collusion resistance.

Simplified Data Provenance / Web2 Integration into Web3: TEEs offer a straightforward way to integrate Web2 data and oracles into Web3. Instead of transforming the entire data supply chain into a ZK-friendly format, you can set up a TEE to process off-chain/Web2 data, ensuring secure data handling without extensive reengineering.

Ease of Setup: TEEs are relatively easy to set up, requiring only basic technical expertise and traditional programming languages. They are also widely supported by Web2 hardware and software providers. In contrast, ZK compute demands a much deeper technical understanding. Previously, setting up ZK compute required knowledge of specialized ZK proving languages such as Cairo and Circom, although the introduction of compilers partly mitigated this.

Open-Source TEE Design: There is a strong focus on developing open-source TEE designs to raise security standards; these are expected to be widely available in the next 2-3 years. This will significantly enhance security by allowing a crowd-sourced community to audit and improve TEEs.

Mixing Web3 with TEEs: Several projects (Circlesmoney’s Quartz and, to some extent, Flashbots’ Suave) are working on ways to add a blockchain layer to the TEE stack to solve some of the pain points of (centralized) orchestration services. Orchestration services ensure that TEEs operate securely and efficiently by providing centralized management and oversight.

Disadvantages:

Vulnerability to Side-Channel Attacks: While TEEs provide strong protections for the program running inside them, there is a risk of side-channel attacks compromising signing keys. Side-channel attacks exploit information leaked by how a computer protocol or algorithm is implemented, rather than flaws in its design. A well-known side-channel attack vector within TEEs is the memory hierarchy: data caching creates fast and slow execution paths, and attackers can exploit the resulting timing differences to infer sensitive information such as cryptographic keys. If you want to learn more about TEE side-channel attacks, feel free to start here (Side Channel Risks in Hardware TEEs). This risk can be reduced by using TEE committees with diverse stacks from multiple vendors like Intel, AMD, and AWS.
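The sketch below shows the underlying principle with a deterministic stand-in: instead of measuring cache timing, it counts how many bytes an early-exit comparison examines. An attacker who observes only that count can recover a secret byte by byte, while Python's `hmac.compare_digest` is designed to avoid such content-dependent early exits. The functions are hypothetical and do not model any specific published attack on SGX.

```python
import hmac


def leaky_equals(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Early-exit comparison. Returns (match, bytes_examined).

    The work done depends on how many leading bytes are correct: a
    data-dependent execution path, which is what timing side channels measure.
    """
    steps = 0
    if len(secret) != len(guess):
        return False, steps
    for a, b in zip(secret, guess):
        steps += 1
        if a != b:
            return False, steps
    return True, steps


def constant_time_equals(secret: bytes, guess: bytes) -> bool:
    # Standard-library comparison intended to avoid content-dependent timing.
    return hmac.compare_digest(secret, guess)


def recover_secret(secret: bytes, alphabet: bytes) -> bytes:
    """Attacker observing only the step count recovers the secret byte by byte."""
    known = b""
    while len(known) < len(secret):
        pad = len(secret) - len(known) - 1
        for c in alphabet:
            guess = known + bytes([c]) + b"\x00" * pad
            match, steps = leaky_equals(secret, guess)
            # A correct byte lets the comparison run past the current position.
            if match or steps > len(known) + 1:
                known += bytes([c])
                break
        else:
            raise ValueError("secret byte not in alphabet")
    return known


print(recover_secret(b"k3y!", bytes(range(256))))  # b'k3y!'
```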

Reputation Issues: TEEs have faced numerous security breaches in the past, such as SGAxe, Spectre, and the Downfall bug, which have severely undermined their reputation.

Risk of Liveness attacks: As with any cryptographic verification method, TEEs are vulnerable to liveness attacks, which exploit a system’s reliance on the secure enclave's timely response. Disruptions can cause delays or denial of service, impacting the reliability of the system and potentially causing failures in authentication or execution of critical operations.

Case study: Marlin’s Oyster

Over the last couple of years, Marlin has established itself as one of the leading protocols in the decentralized compute space, offering several ZK and TEE solutions for developers and protocols to enable secure off-chain compute. One of their core products, Oyster, is a TEE Co-Processor marketplace.

Oyster is a permissionless off-chain compute marketplace, enabling applications to outsource their computational tasks to external compute providers. In doing so, Marlin takes additional steps to ensure confidentiality and integrity by running compute operations in secure enclaves. Oyster offers two types of products: instance-based compute (for long-lasting operations) and serverless compute (for one-off operations). In the Oyster Instance marketplace, developers can filter servers based on their preferences (vCPU, memory, geographical region, hourly rate) and outsource computational tasks (ML compute, back-ends, front-ends, MEV bots, etc.) to 3rd parties. All of this is offered via TEE enclaves guaranteeing computational integrity and confidentiality.

Oyster Serverless is the AWS Lambda of Web3. It allows functions to be executed and paid for on the fly without renting a dedicated instance. We now have a Web2 staple, natively accessible via crypto rails. Relay contracts allow smart contracts to place computation requests and transmit responses, which lets Oyster efficiently serve dApps deployed on any blockchain. Furthermore, latent supply ensures that compute capacity can scale commensurate with demand.

Marlin relies on a PoS network for security requiring node operators to stake a certain amount of their native token $POND in order to prevent malicious behavior. This also allows anyone with a sufficient stake of $POND to offer their compute capacity, further decentralizing the infrastructure.

To address the liveness issues of TEEs head on, Oyster penalizes nodes for downtime and reassigns tasks to operational nodes if an issue occurs. Currently, all operators are required to stake $POND in order to participate, though requirements continue to evolve as Marlin recently entered a partnership with the restaking protocol Symbiotic, an Eigenlayer competitor. Stay tuned for updates…
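A minimal sketch of the staking and liveness mechanics described for Oyster, assuming a hypothetical minimum stake and a fixed per-incident downtime penalty. Marlin's actual parameters, penalty schedule, and assignment logic are not specified in this text, so `MIN_STAKE`, `DOWNTIME_PENALTY`, and the first-available assignment rule are illustrative only.

```python
from dataclasses import dataclass, field

MIN_STAKE = 1_000       # hypothetical minimum $POND stake to register
DOWNTIME_PENALTY = 100  # hypothetical stake deducted per missed job


@dataclass
class Operator:
    name: str
    stake: int
    online: bool = True


@dataclass
class Marketplace:
    operators: list[Operator] = field(default_factory=list)

    def register(self, op: Operator) -> None:
        # Staking requirement: only sufficiently staked operators may join.
        if op.stake < MIN_STAKE:
            raise ValueError(f"{op.name} must stake at least {MIN_STAKE}")
        self.operators.append(op)

    def eligible(self) -> list[Operator]:
        return [op for op in self.operators if op.stake >= MIN_STAKE]

    def run_job(self, job: str) -> str:
        """Try eligible operators in order; penalize and skip any that are down."""
        for op in self.eligible():
            if op.online:
                return f"{job} executed by {op.name}"
            # Downtime is penalized, then the job falls through to the next operator.
            op.stake -= DOWNTIME_PENALTY
        raise RuntimeError("no live operator available")


market = Marketplace()
flaky = Operator("flaky-node", stake=1_050, online=False)
market.register(flaky)
market.register(Operator("steady-node", stake=2_000))
print(market.run_job("ml-inference"))  # ml-inference executed by steady-node
print(flaky.stake)                     # 950, now below MIN_STAKE and no longer eligible
```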

Case study: Phala Network

Phala Network focuses on the intersection of TEEs and AI through the development of “AI Co-Processors”. The primary objective of an AI Co-Processor is to enable developers to create AI-driven dApps that can seamlessly interact with blockchain services. At present, Phala Network has 36,000 attested TEE workers on its network, each running a secure enclave. In addition, Phala runs a unique “AI cluster” consisting of 86 “vetted” workers, which execute an average of 700,000 smart contract calls per day and support 4,700 AI contracts/scripts.

Phala’s core product is an AI agent contract, enabling the on-chain computation (inside a TEE) of an off-chain AI agent script. Within the script, developers can create and define their agent logic using TypeScript and JavaScript. Once the contract is deployed on Phala, it is automatically uploaded to a decentralized storage system such as IPFS. The script can include predefined settings such as reasoning prompts, execution prompts, custom agent rules, and the retrieval of external data sources. Additionally, developers can add confidential information to their AI script for secure processing. Then the script is executed inside a TEE through the Phala gateway, which downloads the program from its hash. Essentially, IPFS serves as a repository for code storage, while the TEE acts as the executor.

The AI agent script remains off-chain, with execution occurring inside the TEE, specifically within the AgentVM. If one secure off-chain worker is unavailable, another worker within Phala Network’s AI cluster takes over the execution. Phala Network’s TEE AI agent interplay not only enhances the capabilities of AI-driven dApps but also ensures that these applications operate securely and efficiently within a decentralized ecosystem.
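The storage and execution split can be sketched as follows, assuming plain SHA-256 hex digests in place of real IPFS content identifiers (which use multihash-encoded CIDs) and simple Python callables in place of TEE workers. `ContentStore`, `gateway_fetch`, and `execute_with_failover` are hypothetical names, not Phala APIs.

```python
import hashlib
from typing import Callable

Worker = Callable[[bytes, str], str]  # (script, user_input) -> output


class ContentStore:
    """Stand-in for IPFS: content is addressed by its hash (simplified)."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        digest = hashlib.sha256(data).hexdigest()
        self._blobs[digest] = data
        return digest

    def get(self, digest: str) -> bytes:
        return self._blobs[digest]


def gateway_fetch(store: ContentStore, digest: str) -> bytes:
    """Download a script by its hash and refuse content that does not match it."""
    data = store.get(digest)
    if hashlib.sha256(data).hexdigest() != digest:
        raise ValueError("content does not match its hash")
    return data


def execute_with_failover(workers: list[Worker], script: bytes, user_input: str) -> str:
    """Run on the first available worker; if one is down, the next takes over."""
    for worker in workers:
        try:
            return worker(script, user_input)
        except ConnectionError:
            continue
    raise RuntimeError("no worker in the cluster is available")


def offline_worker(script: bytes, user_input: str) -> str:
    raise ConnectionError("worker unavailable")


def live_worker(script: bytes, user_input: str) -> str:
    return f"ran {len(script)}-byte script on {user_input!r}"


store = ContentStore()
script_hash = store.put(b"agent: answer politely")
script = gateway_fetch(store, script_hash)
print(execute_with_failover([offline_worker, live_worker], script, "hello"))
# ran 22-byte script on 'hello'
```

Because the hash is checked on every fetch, a worker cannot silently run a different script than the one the developer deployed.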

Case study: Automata’s multi-prover partnership with Scroll and Linea

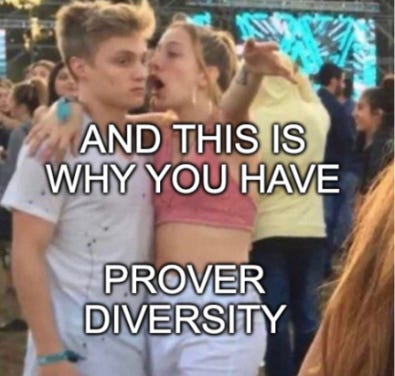

Automata is one of the most active companies within the TEE Web3 space. The team has launched numerous innovative products that combine off-chain compute with on-chain actions. One of their most exciting projects is the multi-prover, developed in collaboration with Scroll and Linea, which looks to enhance security for Ethereum-based ZK Roll-Ups.

At a high level, this multi-prover approach involves adding a TEE prover alongside the original ZK prover, which significantly increases the reliability of the proving process. Due to their complexity, existing ZK provers are prone to bugs that could be exploited to prove false transactions, enable double-spending, or commit incorrect state updates. By introducing a secondary TEE prover, Automata provides an alternative way to verify state transitions and thus offers greater confidence and robustness in ZK systems. In this scenario, TEE-based attestations mitigate the systemic vulnerabilities in zk(E)VMs and reduce the risk of a zkEVM soundness bug being exploited.

Another advantage of Automata's approach is the verifiable trust native to TEEs. Proof generation occurs within an isolated execution environment protected against unauthorized access, and attestation verifies the integrity of the secure enclave, maintaining trust in the proof system. Additionally, efficiency is a key benefit. While generating zero-knowledge proofs can be time and resource-intensive, SGX proofs are less demanding, which improves overall efficiency.

How the TEE multi-prover works for Scroll:

Submitting Batched Transactions: The Scroll sequencer submits batched transactions to the Ethereum L1 contract.

Creating ZKP: The Scroll zkProver processes the transactions and creates a ZKP.

Submitting ZKP to Ethereum: Scroll’s prover sends the ZKP to the verifier contract.

Previously, the aforementioned steps represented the standard approach relying on a sole ZK prover which presented a single point of failure. With the multi-prover partnership through Automata, an additional off-chain proving process occurs simultaneously:

Enclave Creation: A TEE is created to securely handle computations.

Creating Proof of Execution: The TEE Prover re-executes the batched transactions committed by the sequencer to the L1 contract, ensuring they match the expected state transition.

Submitting TEE Proof to Verifier: The TEE Prover submits the Proof of Execution (PoE) to the TEE Verifier.

Remote Attestation: The remote attestation process verifies the integrity of the TEE Prover's execution environment, establishing trust in the on-chain results.
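One way to picture the combined ZK and TEE pipeline is a 2-of-2 acceptance rule: a batch is finalized only when a valid ZK proof and an attested TEE proof of execution agree on the same post-state root. This is a simplified assumption for illustration; the actual Scroll and Automata contracts may combine the two results differently, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ZkResult:
    post_state_root: str
    proof_valid: bool        # outcome of the ZK verifier contract (simulated)


@dataclass(frozen=True)
class TeeResult:
    post_state_root: str
    attestation_valid: bool  # outcome of remote attestation (simulated)


def finalize_batch(zk: Optional[ZkResult], tee: Optional[TeeResult]) -> str:
    """Hypothetical 2-of-2 rule: finalize only if both provers agree."""
    if zk is None or not zk.proof_valid:
        return "pending: no valid ZK proof"
    if tee is None or not tee.attestation_valid:
        return "pending: no attested TEE proof of execution"
    if zk.post_state_root != tee.post_state_root:
        # Disagreement signals a possible soundness bug in one of the provers.
        return "halted: provers disagree"
    return f"finalized: {zk.post_state_root}"


print(finalize_batch(ZkResult("0xabc", True), TeeResult("0xabc", True)))  # finalized: 0xabc
print(finalize_batch(ZkResult("0xabc", True), TeeResult("0xdef", True)))  # halted: provers disagree
```

A single faulty prover can therefore delay a batch but cannot finalize an incorrect state on its own.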

In order to facilitate the deployment of their TEE multi-prover, Automata is launching an EigenLayer AVS (we will touch on this later on). This Automata AVS will provide easy access for protocols to additional TEE attestation services by professional operators like P2P and Allnodes.

Crypto-Economic Co-Processor (the Trust Broker)

With ZK, TEE and OP Co-Processors, we rely on cryptographic means to prove the correctness of off-chain computation. However, Ethereum and other proof-of-stake (PoS) systems introduced the concept of Crypto-Economic security. Trust by accountability, trust by economic stake.

Eigenlayer has established a new vertical by creating a marketplace for decentralized trust through its restaking mechanism (recommended reading: Restaking and the price of trust). This innovative approach allows Ethereum staked collateral to be “restaked” in order to secure dApps for which it is more efficient to outsource security to a 3rd party than to bootstrap a validator network from scratch. These are known as Actively Validated Services (“AVS”), and this marketplace allows AVSs to rent “decentralized trust” from external providers.

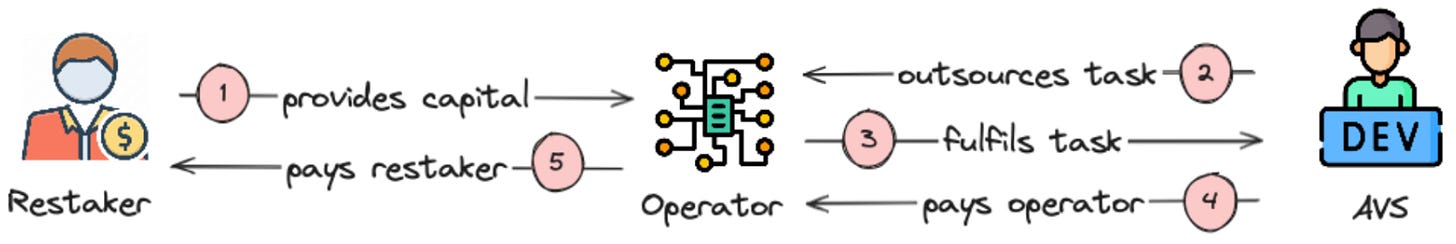

While the concept of restaking is broadly discussed, recent conversations have led us to believe that very few people really understand the intended (and unintended!) consequences and risks of restaked collateral. Let’s look at it from a first principles perspective. Providing trust is an interplay of three different parties: 1) an AVS, which requires a “task” to be executed; 2) a professional compute operator who is executing the “task”; 3) ETH restakers who provide economic collateral to ensure that the “task” is not compromised or hijacked by malicious actors.

These 3 parties use each other for different purposes. The AVS is outsourcing a “task” to an operator. Once the task is fulfilled, the operator gets paid by the AVS. As the operator gets paid, their restakers are also paid. The more economic security (defined as the dollar value of restaked assets) these operators can attract, the more “secure” the job is. The more complex the task is, the higher the yield expectations of the operator. While Eigenlayer and other restaking services have taken the world by (hype) storm, not many care to ask 4 fundamental questions:

What tasks do you need to outsource to operators? (task outsourcing)

How do you pay for trust? (payment methodology)

How much do you pay for trust? (yield)

What is the minimum viable and optimal amount of trust? (matching trust demand & supply)

As with most complex questions, the answers are nuanced and largely depend on the AVS and the tasks they are outsourcing. We would like to go into two brief examples.

Lagrange - ZK Co-Processor AVS: Lagrange’s ZK Co-Processor outsources the job of proving the liveness of their ZK prover to operators and restakers. Currently, they have attracted economic security worth $7 billion, which would typically require substantial nominal yields paid to operators and restakers. Even a 1% yield would cost $70 million annually, raising questions about economic feasibility. Such payments could range from USDC/ETH for profitable protocols to native token inflation for unprofitable ones.
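The arithmetic behind the Lagrange example is simple: annual cost equals restaked value times yield. The sketch below works in basis points to keep integer math exact and adds a hypothetical reward split (an operator commission first, with the remainder shared pro rata among restakers) to illustrate the AVS-to-operator-to-restaker flow described earlier; the commission rate is an assumption, not a figure from Eigenlayer or Lagrange.

```python
def annual_trust_cost(security_usd: int, yield_bps: int) -> int:
    """Annual payment needed to offer `yield_bps` basis points on restaked value."""
    return security_usd * yield_bps // 10_000


def split_rewards(total: int, commission_bps: int, restakes: dict[str, int]) -> dict[str, int]:
    """Hypothetical split: operator commission first, remainder pro rata by restake.

    Integer division may leave a few units of rounding dust undistributed.
    """
    commission = total * commission_bps // 10_000
    pool = total - commission
    stake_sum = sum(restakes.values())
    payouts = {name: pool * amount // stake_sum for name, amount in restakes.items()}
    payouts["operator"] = commission
    return payouts


print(annual_trust_cost(7_000_000_000, 100))  # 70000000 (1% on $7bn)
print(annual_trust_cost(7_000_000_000, 200))  # 140000000 (2% on $7bn)
print(split_rewards(1_000, 1_000, {"alice": 3, "bob": 1}))
# {'alice': 675, 'bob': 225, 'operator': 100}
```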

In Lagrange’s case, given that slashing is not currently enabled on Eigenlayer (meaning there is no actual risk for stakers) and the task of proving liveness is relatively straightforward, the actual payment to operators and restakers is minimal and far below the expected cost of 1-2% per annum. Even once slashing is enabled, most consensus designs may not need it, as sanctions that withhold economic rewards are often sufficient. This means that instead of slashing the operator, Eigenlayer can withhold operator/restaker rewards or prevent them from withdrawing their assets for a couple of weeks.

Automata - multi-prover AVS: Automata has launched their multi-prover AVS, which will provide Roll-Ups with easy access to TEE attestation services run by professional operators. In Automata’s case, every professional operator runs their own TEE enclave (e.g. P2P runs Intel SGX, Allnodes runs Nvidia confidential compute). Rather than bootstrapping a network of various TEE providers and guaranteeing their compute correctness and integrity, Automata simply outsources the TEE computation task to external operators. While this service seems to be more fundamental to the business model than guaranteeing liveness, we do not expect Automata to pay more than 2-3% to operators and restakers. In fact, we are eager to see the actual nominal amount that will be restaked to Automata and the corresponding yield equilibrium.

To summarize, both examples show that AVSs are superior for straightforward functions such as liveness guarantees and suboptimal for computationally intensive functions such as real-time proving or TEE computations.

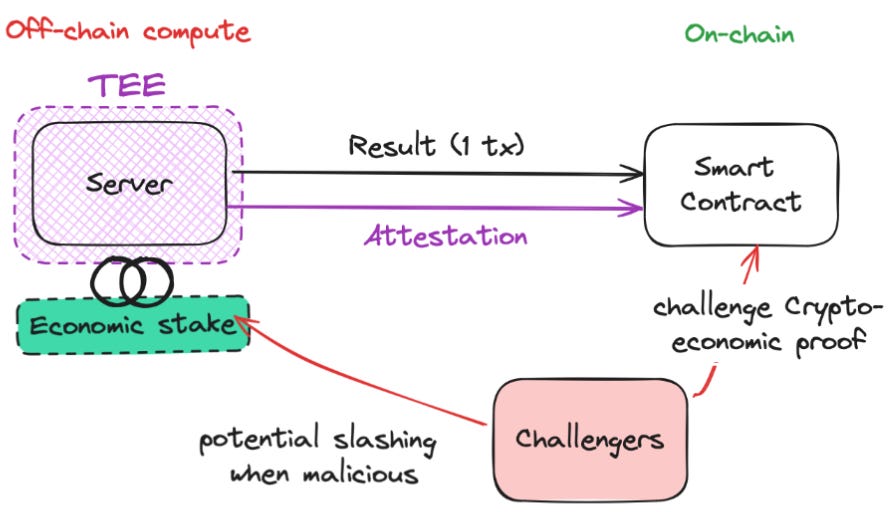

Now that we understand the dynamics of restaking and the potential caveats for AVSs, let’s touch on what a Crypto-Economic Co-Processor could look like. In this construct, a restaking operator fulfills a task off-chain and subsequently posts the result on-chain. To ensure accuracy, a challenger can submit some form of fraud proof.
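A minimal sketch of this construct, assuming the simplest possible fraud proof: the challenger re-executes the task and, if the posted result differs, the operator's bond is slashed and a hypothetical 50% share goes to the challenger. Production systems such as optimistic rollups typically use interactive bisection rather than full re-execution, and the names here are illustrative.

```python
from dataclasses import dataclass
from typing import Callable

Task = Callable[[int], int]


@dataclass
class Claim:
    operator: str
    task_input: int
    result: int
    bond: int            # restaked collateral backing this claim
    slashed: bool = False


def post_result(operator: str, task: Task, x: int, bond: int, cheat: bool = False) -> Claim:
    """Operator computes off-chain and posts the result with collateral at risk."""
    result = task(x) + (1 if cheat else 0)  # `cheat` simulates a malicious operator
    return Claim(operator, x, result, bond)


def challenge(claim: Claim, task: Task, reward_share_bps: int = 5_000) -> int:
    """Hypothetical fraud proof by re-execution.

    If the posted result is wrong, the bond is slashed and part of it goes to
    the challenger. Returns the challenger's reward (0 if the claim holds).
    """
    if task(claim.task_input) == claim.result:
        return 0
    claim.slashed = True
    return claim.bond * reward_share_bps // 10_000


square: Task = lambda v: v * v
honest = post_result("op-1", square, 12, bond=1_000)
dishonest = post_result("op-2", square, 12, bond=1_000, cheat=True)
print(challenge(honest, square), honest.slashed)        # 0 False
print(challenge(dishonest, square), dishonest.slashed)  # 500 True
```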

Let’s dive into the advantages and disadvantages of using Crypto-Economic verification for Co-Processors.

Advantages:

Temporarily Cost-Effective: Today, without slashing and without any required payments from AVSs to operators and restakers, Crypto-Economic trust is the most economical computing solution available (free). However, as slashing goes live and restakers begin requiring more substantial payment, Crypto-Economic trust will become increasingly expensive.

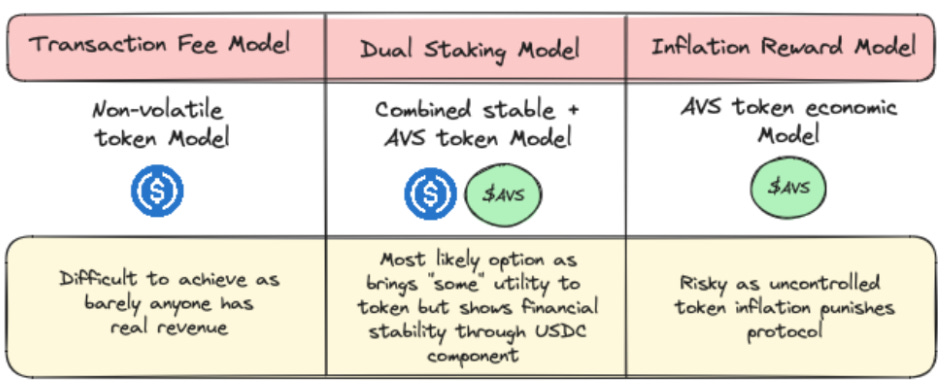

Flexible Payment Models: AVSs can choose from various payment models for trust providers. Options include paying in stablecoins, native tokens, a combination of both or ETH and other base layer tokens. Flexible payment options are an advantage as they allow AVSs to subsidize payments through native tokens in early days.

Speed of “achieving trust”: Instead of building complex verification systems, Crypto-Economic trust provides a plug-and-play solution and significantly reduces the time-to-market.

Speed of “computation”: Crypto-Economic trust providers offer a 'fast-lane' mode where operators quickly verify execution and attest to its correctness. Unlike ZKPs and Optimistic Fraud Proofs, this allows applications using Crypto-Economic Co-Processors to process their output immediately after verification.

Liveness: Every cryptographic verification method can suffer from liveness attacks which in turn can create delays or denial of service. By relying on a restaking service for liveness, one can increase operational security and ensure the system remains responsive.

So how does Crypto-Economic security actually ensure protocol liveness? Protocols outsource liveness guarantees to operators by implementing economic incentives and penalties. For instance, ZK Co-Processor Lagrange outsources its ZK prover to a network of 70+ provers run by different restaking operators. Each proof is divided into smaller parts, processed in parallel, and then combined. If an operator fails to deliver their part of the proof, they face penalties such as slashing or loss of rewards. This financial deterrent encourages operators to maintain multiple provers to guarantee liveness, as seen with restaking operator Puffer running 10+ provers. By outsourcing these guarantees, protocols shift the responsibility of maintaining liveness to the operators, who are economically motivated to ensure fluid operation.
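A toy model of the split-prove-combine pattern, assuming hashes as placeholders for real proofs and a round-robin assignment of parts to operators. Each operator is represented only by how many of its redundant provers are live; an operator with at least one live prover delivers its parts, mirroring why operators like Puffer run many provers. Lagrange's actual proving and aggregation scheme is not reproduced here.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Optional


def prove_part(part: bytes) -> str:
    # Placeholder for the real proving work on one slice of the computation.
    return hashlib.sha256(b"proof:" + part).hexdigest()


def operator_prove(live_provers: int, part: bytes) -> Optional[str]:
    """An operator delivers its part if at least one of its redundant provers is live."""
    return prove_part(part) if live_provers > 0 else None


def distributed_prove(parts: list[bytes], operators: dict[str, int]) -> tuple[Optional[str], list[str]]:
    """Assign parts round-robin, prove them in parallel, then combine the results.

    Returns (combined_proof, failed_operators); failed operators are the ones
    that would lose rewards or be slashed.
    """
    names = list(operators)
    assignment = [(names[i % len(names)], part) for i, part in enumerate(parts)]
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda job: operator_prove(operators[job[0]], job[1]), assignment))
    failed = sorted({name for (name, _), r in zip(assignment, results) if r is None})
    if failed:
        return None, failed
    # Stand-in aggregation: hash the ordered part proofs together.
    combined = hashlib.sha256("".join(results).encode()).hexdigest()
    return combined, []


parts = [b"slice-0", b"slice-1", b"slice-2", b"slice-3"]
print(distributed_prove(parts, {"puffer": 10, "op-b": 0}))  # (None, ['op-b'])
```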

Unknowns:

Payment design is unproven: Most protocols do not have sustainable income streams to cover payments in stablecoins to operators and restakers. As a result, many AVSs will initially rely on native token inflation for payments, which is unsustainable in the long-run.

Unproven design: While all mechanics work on paper, they have yet to be battle-tested in the real world.

Unclear slashing risk: The absence of clarity around slashing mechanisms introduces uncertainty, specifically around the possibility of slashing cascades.

Additional economic complexity for protocols: Restaking services were initially designed to let protocols focus on product development rather than bootstrapping their own economic trust networks. In practice, however, protocols using restaking services still face economic complexity in determining the optimal amount of economic security, the corresponding yield, and the most appropriate payment model.
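To make the slashing-cascade risk listed above more concrete, here is a deliberately crude Python toy model, not a description of EigenLayer's actual slashing rules. It assumes that a single pool of restaked stake backs several hypothetical AVSs (`oracle`, `bridge`, `coprocessor`), that attacking an AVS becomes rational once its assumed profit-from-corruption is at least the stake at risk, and that every attack is caught and slashed by an assumed 50%.

```python
def cascade(stake: float, avs_profit: dict[str, float], first_slash: float,
            attack_slash: float = 0.5) -> tuple[list[str], float]:
    """Toy model of shared restaked security.

    Assumptions (all hypothetical): one pool of `stake` backs every AVS, attacking
    an AVS is rational once its profit-from-corruption >= the stake at risk, and
    every attack is caught and slashed by `attack_slash`.
    """
    stake *= 1 - first_slash  # an initial slashing event, e.g. on an unrelated AVS
    attacked: list[str] = []
    while True:
        newly_exposed = [avs for avs, profit in avs_profit.items()
                         if avs not in attacked and profit >= stake]
        if not newly_exposed:
            return attacked, stake
        for avs in newly_exposed:
            attacked.append(avs)
            stake *= 1 - attack_slash  # each slash shrinks security for the remaining AVSs


avs_profit = {"oracle": 95.0, "bridge": 60.0, "coprocessor": 30.0}
print(cascade(100.0, avs_profit, first_slash=0.0))
print(cascade(100.0, avs_profit, first_slash=0.1))
```

In this toy setup, 100 units of stake keep all three AVSs safe, but an unrelated 10% slash makes the first AVS profitable to attack, and each subsequent slash exposes the next one.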

We hear you, Bernie! So let’s explain Crypto-Economic Co-Processors by looking at Fhenix.

Case study: Fhenix’s FHE Co-Processor

One of the most hyped areas of research in H1/2024 has been fully homomorphic encryption (“FHE”). On the off-chance you find yourself having to explain FHE to the person sitting next to you at dinner, we will briefly touch on the basic concepts (for details, check out Zama’s introduction to FHE). FHE allows computation over encrypted data while the data remains encrypted. Conventional approaches decrypt the data, run the computation and then re-encrypt the result. With FHE, the data remains encrypted throughout the entire process.
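FHE itself is too involved for a short example, but the core idea of computing on ciphertexts can be illustrated with the Paillier cryptosystem. Note that this is a swapped-in and much simpler scheme: Paillier is only additively homomorphic (it supports adding encrypted values and multiplying them by plaintext constants, not arbitrary computation). The sketch uses tiny, insecure primes, is not how Fhenix or Zama implement FHE, and needs Python 3.9+ for `math.lcm`.

```python
import math
import secrets

# Toy Paillier keypair. Paillier is only additively homomorphic (not FHE),
# and these tiny primes are for illustration only: wildly insecure.
P, Q = 1009, 1013
N = P * Q
N2 = N * N
G = N + 1                     # standard simplification g = n + 1
LAM = math.lcm(P - 1, Q - 1)  # Carmichael function lambda(n)
MU = pow(LAM, -1, N)          # modular inverse; valid because g = n + 1


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N with fresh randomness r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    l_value = (pow(c, LAM, N2) - 1) // N  # Paillier's L function: L(x) = (x - 1) / n
    return (l_value * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod N)."""
    return (c1 * c2) % N2


def scale_encrypted(c: int, k: int) -> int:
    """Raising a ciphertext to the k-th power multiplies the plaintext by k (mod N)."""
    return pow(c, k, N2)


# Whoever computes `total` only ever sees ciphertexts, never 20 or 22.
a, b = encrypt(20), encrypt(22)
total = add_encrypted(a, b)
print(decrypt(total))                  # 42
print(decrypt(scale_encrypted(a, 3)))  # 60
```

Only the key holder can read the result. Full FHE extends this idea to both addition and multiplication of ciphertexts, which is what allows arbitrary programs to run over encrypted data.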

Because of its complexity, FHE computation has, over the last decade, been far slower than equivalent computation on unencrypted data. Many deemed it “a bridge too far”, hardly imagining this technology could one day have real-world applications. However, with recent improvements in high-performance compute hardware, this perception has changed dramatically.

Back to the case study… As one of the most active companies in the FHE space, Fhenix is building two products which offer FHE services to developers: (1) a stateful L2 and (2) a stateless Co-Processor. The goal at Fhenix is to provide developers a suite of tools, resources, and infrastructure to seamlessly build with FHE.

The protocol initially envisioned creating its own Layer 1, but found that replicating FHE computation and reaching consensus over encrypted data was too expensive. The team at Fhenix also faced issues around consensus verification. As a result, Fhenix is now building an FHE Layer 2 on Ethereum using the Arbitrum stack, creating an encrypted Optimistic Roll-Up. However, Arbitrum's seven-day dispute period is impractical for FHE applications, which would otherwise have to wait a week before treating their results as final. To address this challenge, Fhenix is using EigenLayer to Crypto-Economically secure a “fast-lane” mode, which allows applications on Fhenix’s L2 to use their output immediately after verification.
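The difference between the optimistic slow path and the crypto-economic “fast-lane” can be sketched as an acceptance rule. In this Python sketch, the two-thirds stake-weighted quorum, the `Attestation` type and the `is_usable` function are hypothetical assumptions for illustration, not Fhenix's or EigenLayer's actual parameters or interfaces. The slow path also assumes that no fraud proof succeeded during the dispute window.

```python
from dataclasses import dataclass

DISPUTE_WINDOW_S = 7 * 24 * 60 * 60  # Arbitrum's seven-day dispute period, in seconds
QUORUM = 2 / 3                        # hypothetical stake-weighted fast-lane threshold


@dataclass(frozen=True)
class Attestation:
    operator: str
    stake: float
    result_hash: str


def is_usable(result_hash: str, posted_at: int, now: int,
              attestations: list[Attestation], total_stake: float) -> bool:
    """Can an application act on this result yet?"""
    # Slow path: the optimistic dispute window has passed
    # (assumes no fraud proof succeeded in the meantime).
    if now - posted_at >= DISPUTE_WINDOW_S:
        return True
    # Fast lane: enough restaked stake has attested to this exact result.
    # Keyed by operator so one operator cannot be counted twice.
    stake_by_operator = {a.operator: a.stake for a in attestations
                         if a.result_hash == result_hash}
    return sum(stake_by_operator.values()) >= QUORUM * total_stake


atts = [Attestation("op-a", 40.0, "0xabc"), Attestation("op-b", 30.0, "0xabc"),
        Attestation("op-c", 30.0, "0xdef")]
print(is_usable("0xabc", 0, 60, atts, total_stake=100.0))
print(is_usable("0xdef", 0, 60, atts, total_stake=100.0))
```

A result backed by 70% of the stake is usable after one minute, while the result backed by only 30% has to wait out the full seven days.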

In addition, Fhenix is developing an FHE Co-Processor. Because a Co-Processor is stateless, applications do not need to commit to a state and can request one-off computations. This differs from the Fhenix L2 solution, where applications need to commit to a state.

This highlights a significant advantage of Co-Processors: they offer developers the flexibility to leverage different capabilities (e.g., FHE) without the need to commit state on an L1 or L2, thereby providing more efficient and cost-effective options for specific use cases.

Let’s dive into the details to really understand how the architecture works in practice:

Request Initiation: An application contract requests an encrypted computation on the FHE Co-Processor.

Queuing the Request: Fhenix’s relay contract queues the request.

Relaying the Request: A relay node listens for events in the relay contract and sends the request to the stateless FHE Co-Processor.

Executing the Computation: The FHE Co-Processor performs the computation over the encrypted inputs.

Sending the Encrypted Result: The FHE Co-Processor sends the encrypted result of the computation to Fhenix’s network.

Decrypting the Output: Fhenix’s network decrypts the computation output.

Providing Crypto-Economic Proof: Fhenix-assigned EigenLayer operators confirm that the compute execution was done correctly.

Returning Results: The relay node returns the decrypted output, together with the Crypto-Economic proof of correct computation, to the relay contract.

Verifying the Proof: The relay contract verifies the proof and sends the result to the application.

Receiving the Results: The application contract receives the results.
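The ten steps above can be traced in a compact Python simulation. It is a sketch under heavy assumptions: encryption is faked with a `Ciphertext` wrapper that a real system would never expose, the only supported operation is addition, operators "attest" by re-executing and submitting a SHA-256 commitment (a real AVS would use proper signatures, such as BLS signatures), and a simple count of attestations replaces stake-weighted verification. All class and function names are hypothetical, not Fhenix's contracts or APIs.

```python
import hashlib
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Ciphertext:
    # Placeholder only: a real FHE ciphertext never exposes its plaintext.
    _plaintext: int


@dataclass(frozen=True)
class Request:
    req_id: int
    a: Ciphertext
    b: Ciphertext


def commit(req_id: int, result: int) -> str:
    """Stand-in for an operator signature over (request, result)."""
    return hashlib.sha256(f"{req_id}:{result}".encode()).hexdigest()


def fhe_add(x: Ciphertext, y: Ciphertext) -> Ciphertext:
    """Executing the Computation: the co-processor works on ciphertexts only."""
    return Ciphertext(x._plaintext + y._plaintext)


def network_decrypt(c: Ciphertext) -> int:
    """Decrypting the Output: done by Fhenix's network, not by the co-processor."""
    return c._plaintext


class RelayContract:
    def __init__(self, operators: set[str], quorum: int):
        self.queue: deque[Request] = deque()
        self.operators = operators
        self.quorum = quorum
        self.delivered: dict[int, int] = {}  # what the application contract receives

    def request(self, req: Request) -> None:
        """Request Initiation + Queuing the Request."""
        self.queue.append(req)

    def fulfil(self, req_id: int, result: int, attestations: dict[str, str]) -> None:
        """Verifying the Proof + Receiving the Results."""
        valid = {op for op, digest in attestations.items()
                 if op in self.operators and digest == commit(req_id, result)}
        if len(valid) < self.quorum:
            raise ValueError("insufficient operator attestations")
        self.delivered[req_id] = result


def relay_node(contract: RelayContract, operators: list[str]) -> None:
    """Relays queued requests, gets them computed and decrypted, returns the results."""
    while contract.queue:
        req = contract.queue.popleft()
        result = network_decrypt(fhe_add(req.a, req.b))
        # Providing Crypto-Economic Proof: each operator re-executes the computation
        # and only attests if it reproduces the same result.
        attestations = {op: commit(req.req_id, result) for op in operators
                        if network_decrypt(fhe_add(req.a, req.b)) == result}
        contract.fulfil(req.req_id, result, attestations)


ops = ["op-a", "op-b", "op-c"]
contract = RelayContract(operators=set(ops), quorum=2)
contract.request(Request(1, Ciphertext(20), Ciphertext(22)))
relay_node(contract, ops)
print(contract.delivered)  # {1: 42}
```

The design point the sketch tries to surface is the separation of roles: the co-processor never decrypts, the network never computes, and the relay contract only releases a result once enough registered operators have vouched for it.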

Comparing different verification methodologies

While each approach has tradeoffs, we believe that the true magic lies in the combination of multiple approaches.

The choice of verification method should be driven by the specific needs of the application and its users. This is akin to the Web2 world, where Facebook and Twitter rely on their own servers for data protection reasons, while Netflix and Spotify use cloud providers to reduce capital expenditure.

In practice, a mixture of different verification methods will likely be used. Combining these methods can provide a balanced approach that leverages the strengths of each while optimizing for the desired level of security, performance, and cost-efficiency.

Combining different verification methodologies

No existing verification methodology is perfect, and developers must proactively decide which tradeoffs they want to optimize for.

TEE/ZK and Crypto-Economics:

Programs running inside a TEE benefit from confidentiality and integrity throughout execution, even if the surrounding system is compromised. TEEs enable secure computation, data privacy, and even confidential smart contracts. However, TEEs and ZK provers alike are prone to liveness failures. As explained earlier, these attack and risk vectors can be mitigated by adding Crypto-Economic security to the verification mix.

We have seen an implementation of TEE and Crypto-Economics in Automata’s multi-prover AVS. As a recap, Automata operates an additional TEE prover alongside Scroll’s and Linea’s ZK provers. Over the last few months, Automata has significantly expanded this initiative by creating a multi-prover marketplace in the form of an AVS.

With the multi-prover AVS, any Layer 2 can use Automata’s multi-prover to obtain additional TEE proofs, selecting from various TEE providers. This is a great example of how one verification method (TEEs) can supplement another for enhanced security.
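At its core, a multi-prover setup is an acceptance rule: a batch is finalized only if independent proof systems agree. The Python sketch below assumes a hypothetical `ProverOutput` record whose `valid` flag stands in for on-chain verification of a ZK proof or TEE attestation, and requires one valid ZK proof plus one valid TEE attestation for the same state root. Provider names are made up, and this is not Automata's actual AVS logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ProverOutput:
    kind: str        # "zk" or "tee"
    provider: str    # hypothetical provider label
    state_root: str
    valid: bool      # stands in for on-chain verification of the proof/attestation


def accept_batch(outputs: list[ProverOutput],
                 required_kinds: frozenset = frozenset({"zk", "tee"})) -> Optional[str]:
    """Return the state root to finalize, or None if the batch must not be finalized."""
    valid = [o for o in outputs if o.valid]
    roots = {o.state_root for o in valid}
    if len(roots) != 1:
        return None  # nothing verified, or verified provers disagree: escalate
    root = roots.pop()
    if not required_kinds <= {o.kind for o in valid}:
        return None  # e.g. a ZK proof arrived but no TEE attestation yet
    return root


zk = ProverOutput("zk", "l2-zk-prover", "0xaaa", True)
tee_ok = ProverOutput("tee", "tee-provider-1", "0xaaa", True)
tee_bad = ProverOutput("tee", "tee-provider-2", "0xbbb", True)
print(accept_batch([zk, tee_ok]))   # 0xaaa
print(accept_batch([zk, tee_bad]))  # None
print(accept_batch([zk]))           # None
```

When the TEE and ZK provers disagree, the sketch refuses to finalize rather than picking a winner, so a bug in a single proof system is not enough to finalize an incorrect state root.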

TEE and ZK:

We have also seen combinations of TEEs and ZKPs, some of them still theoretical.

ZK inside TEE (“TEE-ed ZKs”): Placing a custom ZK circuit or zkVM inside a TEE ensures the computation remains secret and secure. This method combines TEE confidentiality with the proof capabilities of ZKPs. It is useful for applications needing high privacy and security, such as financial transactions or private data analysis.

Recursive ZKPs with TEE attestations (“zk-fied TEEs”): Recursive ZKPs compress multiple TEE attestations into a single ZKP, reducing verification costs. Each TEE performs its attestation, and these are aggregated into one ZKP.

Identification of bad TEE attesters: ZKPs can monitor and verify TEE attesters' performance, identifying unreliable ones.
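The cost argument for “zk-fied TEEs” can be written as a back-of-the-envelope comparison. The symbols are our own assumptions for illustration: k TEE attestations, each costing c_att to verify on-chain directly, versus one aggregated proof whose on-chain verification cost c_zk is roughly independent of k. Off-chain proving cost is ignored.

```latex
\begin{aligned}
C_{\text{direct}} &= k \, c_{\text{att}} \\
C_{\text{agg}} &\approx c_{\text{zk}} \\
C_{\text{agg}} < C_{\text{direct}} &\iff k > \frac{c_{\text{zk}}}{c_{\text{att}}}
\end{aligned}
```

In words: once more than c_zk / c_att attestations are batched, verifying the single aggregated proof is cheaper on-chain than checking each attestation individually, and the per-attestation cost c_zk / k keeps falling as k grows.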

This brings us to the end of Chapter 3. Congrats if you made it this far!

Next…

In the next chapter, we will (finally) come to the most actionable section of our Co-Processor report and discuss actual use cases both inside and outside the Crypto sphere.

About Florin Digital

General Disclaimer

Florin Digital and its affiliates make no representation as to the accuracy or completeness of such information listed herein. These materials are not intended as a recommendation to purchase or sell any commodity, security, or asset. Florin Digital has no obligation to update, modify or amend this material or to otherwise notify any reader thereof of any changes.

This presentation and these materials are not an offer to sell securities of any investment fund or a solicitation of offers to buy any such securities. Any investment involves a high degree of risk. There is the possibility of loss, and all investment involves risk including the loss of principal.

Florin Digital LP and its affiliates may currently hold positions in tokens mentioned in this report, may have at one time held positions in tokens mentioned in this report, and may enter into spot, derivative, or other positions related to tokens mentioned herein.

Anything mentioned here should not be interpreted as a recommendation to purchase or sell any token, or to use any protocol listed, described, or discussed. The content of this report reflects the opinions of its authors and is presented for informational purposes only. This is not and should not be construed to be investment, tax, or legal advice.